Slides -- Bioinformatics FAQ Bot

FAQ Bot

San Diego Python Monthly Meetup, by Hobson Lane and Travis Harper, Dec 19, 2019

QA Bot

- WikiQA

- ANSQ

Thank you, Travis!

- Analyzing WikiQA

- Architecting a Transformer

Search-based QA

- Find a matching question-answer pair in a database (DB)

- Translate the question into a statement and search Wikipedia

Scalable Search: O(log(N))

- Discrete index

- Sparse BOW vectors
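A minimal sketch of a discrete (inverted) index built from sparse bag-of-words terms. It keeps the vocabulary sorted and uses binary search (`bisect`), so each term lookup costs O(log(N)) in the vocabulary size. This stands in for the B-tree a database would use. The function names and the AND-query semantics are assumptions for illustration.

```python
import bisect
import re


def build_index(docs):
    """Map each term to a sorted list of ids of the documents that contain it."""
    postings = {}
    for doc_id, text in enumerate(docs):
        for term in set(re.findall(r"\w+", text.lower())):
            # doc ids are visited in increasing order, so each list stays sorted
            postings.setdefault(term, []).append(doc_id)
    terms = sorted(postings)
    return terms, [postings[t] for t in terms]


def lookup(index, term):
    terms, postings = index
    term = term.lower()
    i = bisect.bisect_left(terms, term)  # binary search over the sorted vocabulary
    if i < len(terms) and terms[i] == term:
        return postings[i]
    return []


def search(index, query):
    """AND query: ids of documents that contain every query term."""
    ids = None
    for term in re.findall(r"\w+", query.lower()):
        hits = set(lookup(index, term))
        ids = hits if ids is None else ids & hits
    return sorted(ids or [])


index = build_index(["Hello world!", "Goodbye cruel world", "Hello again"])
print(search(index, "hello world"))  # [0]
```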

Synonyms & Typos

- Stemming

- Lemmatizing

- Spelling Corrector

- BPE (byte-pair encoding)
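A minimal sketch of how byte-pair encoding learns its merge rules: repeatedly count adjacent symbol pairs across a word list and merge the most frequent pair into one symbol. It works on characters rather than raw bytes and marks word ends with `</w>`. Both are simplifying assumptions, and `learn_bpe` is a hypothetical helper, not a library API.

```python
from collections import Counter


def learn_bpe(words, num_merges):
    # each word becomes a tuple of single-character symbols plus an end-of-word marker
    vocab = Counter(tuple(w) + ("</w>",) for w in words)
    merges = []
    for _ in range(num_merges):
        # count every adjacent symbol pair, weighted by word frequency
        pairs = Counter()
        for symbols, freq in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # ties go to the pair seen first
        merges.append(best)
        # rewrite every word, fusing each occurrence of the best pair
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            merged, i = [], 0
            while i < len(symbols):
                if i < len(symbols) - 1 and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            new_vocab[tuple(merged)] += freq
        vocab = new_vocab
    return merges, vocab


merges, vocab = learn_bpe(["low", "lower", "lowest"], 2)
print(merges)  # [('l', 'o'), ('lo', 'w')]
```

Because rare or misspelled words still break down into known subword pieces, a BPE vocabulary never hits a completely unknown token.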

Examples

- Full text search in Postgres

- Trigram indexes in databases

- Elasticsearch
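Trigram indexes make search tolerant of typos by comparing sets of three-character substrings. The sketch below approximates the similarity score of the Postgres `pg_trgm` extension: lowercase the text, pad each word with two leading spaces and one trailing space, then divide the number of shared trigrams by the number of distinct trigrams overall. It is a simplified illustration, not the extension's exact implementation, and the function names are hypothetical.

```python
def trigrams(text):
    """Set of padded character trigrams for every word in the text."""
    grams = set()
    for word in text.lower().split():
        padded = "  " + word + " "
        for i in range(len(padded) - 2):
            grams.add(padded[i:i + 3])
    return grams


def similarity(a, b):
    """Shared trigrams divided by all distinct trigrams (Jaccard similarity)."""
    ta, tb = trigrams(a), trigrams(b)
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


print(round(similarity("hello", "helo"), 3))  # 0.571
```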

Prefilter

- PageRank

- Sparse TFIDF vectors
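PageRank can prefilter candidates by overall importance before any text matching happens. A minimal power-iteration sketch, assuming the commonly used damping factor of 0.85 and that pages with no outgoing links spread their rank evenly over all pages. `pagerank` and its `links` dictionary format are illustrative, not a library API.

```python
def pagerank(links, damping=0.85, iterations=50):
    """links maps each page to the list of pages it links to."""
    pages = sorted(set(links) | {p for targets in links.values() for p in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # every page gets the "random jump" share first
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # split this page's rank equally among the pages it links to
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # dangling page: spread its rank evenly over all pages
                for t in pages:
                    new_rank[t] += damping * rank[page] / n
        rank = new_rank
    return rank


ranks = pagerank({"a": ["c"], "b": ["c"], "c": ["a"]})
print(max(ranks, key=ranks.get))  # c
```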

Examples

- Full Text (keywords): O(log(N))

- TFIDF (Elasticsearch): O(log(N))

- TFIDF + Semantic Search: O(L)

Academic Search Approaches

- Edit distance
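Edit distance counts the minimum number of single-character insertions, deletions, and substitutions needed to turn one string into another. A minimal dynamic-programming sketch of Levenshtein distance, the most common edit distance, that keeps only the previous row of the table; `levenshtein` is a hypothetical helper name.

```python
def levenshtein(a, b):
    # prev[j] = distance between the first i-1 chars of a and the first j chars of b
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]  # turning a[:i] into "" takes i deletions
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(
                prev[j] + 1,         # delete ca
                curr[j - 1] + 1,     # insert cb
                prev[j - 1] + cost,  # substitute (or keep a match)
            ))
        prev = curr
    return prev[-1]


print(levenshtein("kitten", "sitting"))  # 3
```

Each pair of strings costs O(len(a) * len(b)), which is why edit distance is usually applied only to a short candidate list produced by an index.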

Knowledge-based QA

Extract information

Human Initiative

Bot Initiative

Language Models

- Bag of Words

- TFIDF

- Word2Vec

- Universal Sentence Encoder

Bag of Words Vectors (TF)

Term frequency (count):

bow_vectors = [
    {'hello': 1, 'world': 1, '!': 1},
    {'goodbye': 1, 'cruel': 2, 'world': 1, '.': 3},
]
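One way to produce vectors like these is to tokenize and count. A minimal sketch using `collections.Counter`. The lowercasing and the regular expression, which splits out each punctuation character as its own token (so `...` counts as three `.`), are assumptions chosen to reproduce the vectors above.

```python
import re
from collections import Counter


def bag_of_words(text):
    # lowercase, then match runs of word characters or single punctuation characters
    tokens = re.findall(r"\w+|[^\w\s]", text.lower())
    return dict(Counter(tokens))


bow_vectors = [bag_of_words(doc) for doc in ["Hello world!", "Goodbye cruel cruel world ..."]]
print(bow_vectors)
# [{'hello': 1, 'world': 1, '!': 1}, {'goodbye': 1, 'cruel': 2, 'world': 1, '.': 3}]
```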

TFIDF (TF / DF)

# 'world' appears in both documents (DF = 2); every other term has DF = 1
tfidf_vectors = [
    {'hello': 1.0, 'world': 0.5, '!': 1.0},
    {'goodbye': 1.0, 'cruel': 2.0, 'world': 0.5, '.': 3.0},
]
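The TF / DF weighting in the heading divides each term count by the number of documents that contain the term, so words shared across documents (here `world`) are down-weighted. A minimal sketch; many TF-IDF implementations use a logarithmic IDF such as log(N / DF) instead of this plain ratio, and `tf_over_df` is a hypothetical name.

```python
from collections import Counter

bow_vectors = [
    {'hello': 1, 'world': 1, '!': 1},
    {'goodbye': 1, 'cruel': 2, 'world': 1, '.': 3},
]


def tf_over_df(bow_vectors):
    # document frequency: each document contributes each of its terms once
    df = Counter(term for vec in bow_vectors for term in vec)
    return [{term: count / df[term] for term, count in vec.items()} for vec in bow_vectors]


tfidf_vectors = tf_over_df(bow_vectors)
print(tfidf_vectors[0])  # {'hello': 1.0, 'world': 0.5, '!': 1.0}
```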

Parul’s QA Bot

flag = True
print("ROBO: My name is Robo. I will answer your queries about Chatbots. If you want to exit, type Bye!")
while flag:
    user_response = input()
    ...  # rest of the loop body, including the `if` that this `else` belongs to, is not shown
    else:
        flag = False
        print("ROBO: Bye! take care..")
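A self-contained sketch of the same loop, with the elided middle replaced by a simple search-based lookup: choose the FAQ question whose bag-of-words vector has the highest cosine similarity to the user's input. The `FAQ` entries, the `respond` and `chat` names, and the fallback message are hypothetical; this is not the original bot's code.

```python
import math
import re
from collections import Counter

# hypothetical question -> answer pairs, for illustration only
FAQ = {
    "what is a chatbot": "A chatbot is a program that converses with users.",
    "how does search based qa work": "It finds the stored question most similar to yours.",
}
FALLBACK = "Sorry, I don't know the answer to that."


def bow(text):
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def respond(user_response):
    query = bow(user_response)
    scores = {q: cosine(query, bow(q)) for q in FAQ}
    best = max(scores, key=scores.get)
    # no shared words with any stored question: admit we don't know
    return FAQ[best] if scores[best] > 0.0 else FALLBACK


def chat():
    print("ROBO: My name is Robo. I will answer your queries about Chatbots. If you want to exit, type Bye!")
    while True:
        user_response = input()
        if user_response.strip().lower() == "bye":
            print("ROBO: Bye! take care..")
            break
        print("ROBO: " + respond(user_response))
```

Call `chat()` to run it interactively; keeping `respond()` separate from the input loop lets the matching logic be tested on its own.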

Word2Vec

Single-hidden-layer neural net (roughly analogous to PCA on word co-occurrence counts) trained to:

- predict next word

- predict previous word

- predict word 2 positions ago

- …
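The prediction tasks above come from sliding a context window over the text. A minimal sketch of generating (center word, context word) training pairs in the style of word2vec's skip-gram variant; `skipgram_pairs` and its default `window=2` are illustrative assumptions, and training the network itself is not shown.

```python
def skipgram_pairs(tokens, window=2):
    """(center, context) pairs for every context word within `window` positions."""
    pairs = []
    for i, center in enumerate(tokens):
        for offset in range(-window, window + 1):
            j = i + offset
            # skip the center word itself and positions outside the sentence
            if offset != 0 and 0 <= j < len(tokens):
                pairs.append((center, tokens[j]))
    return pairs


print(skipgram_pairs(["hello", "world", "!"], window=1))
# [('hello', 'world'), ('world', 'hello'), ('world', '!'), ('!', 'world')]
```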

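Word Vector Example

import pandas as pd
import spacy

nlp = spacy.load('')  # needs a pipeline that includes 300-d word vectors
doc1 = nlp("Hello world!")
# one row per token, one column per vector dimension
df = pd.DataFrame([term.vector for term in doc1],
                  index=[term.text for term in doc1])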

0 1 ... 298 299

Hello 2.52e-01 0.10 ... -5.19e-01 0.34

world -6.68e-03 0.22 ... 1.41e-03 0.10

! -2.66e-01 0.34 ... -6.29e-02 0.16

DocVec Example

doc1 = nlp("Hello world!")
# document vector = sum of its word vectors (the rows of df)
pd.DataFrame(df.sum(), columns=['doc1']).T

0 1 ... 298 299

doc1 -0.02 0.66 ... -0.58 0.6

DocVec Example

doc2 = nlp('Goodbye cruel cruel world ...')
df2 = pd.DataFrame([term.vector for term in doc2],
                   index=[term.text for term in doc2])
pd.DataFrame(df2.sum(), columns=['doc2']).T

0 1 ... 298 299

doc2 -1.4 0.48 ... -0.52 -0.94

spaCy Rocks!

# Doc.vector averages the token vectors, so each value is the sum above divided by the 5 tokens
docvec2 = nlp('Goodbye cruel cruel world ...').vector
pd.DataFrame(docvec2, columns=['doc2']).T

0 1 ... 298 299

doc2 -0.28 0.1 ... -0.1 -0.19
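The sum-based document vectors and spaCy's averaged `Doc.vector` differ only by a scale factor (the token count), so they point in the same direction and give the same cosine similarity. A minimal pure-Python check, using made-up 3-dimensional vectors as stand-ins for real 300-dimensional spaCy vectors.

```python
import math


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


# made-up token vectors for a 5-token document (not real spaCy values)
token_vectors = [
    [0.2, -0.1, 0.4],
    [0.5, 0.3, -0.2],
    [0.5, 0.3, -0.2],
    [-0.1, 0.2, 0.1],
    [0.0, 0.1, 0.3],
]
doc_sum = [sum(column) for column in zip(*token_vectors)]
doc_mean = [x / len(token_vectors) for x in doc_sum]
query = [0.3, 0.1, 0.2]

# scaling a vector does not change its direction, so both scores agree
print(abs(cosine_similarity(doc_sum, query) - cosine_similarity(doc_mean, query)) < 1e-12)  # True
```

In spaCy itself, `Doc.similarity` computes this cosine similarity between document vectors.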

Universal Sentence Encoder (USE)

Deep neural network encoders (Transformer or deep averaging network variants) trained on multiple tasks to:

- Answer questions

- Summarize sentences

- Guess missing words

Next steps

- Remember state

- Smarter conversation manager

- “Game play” with goal states (user satisfaction)

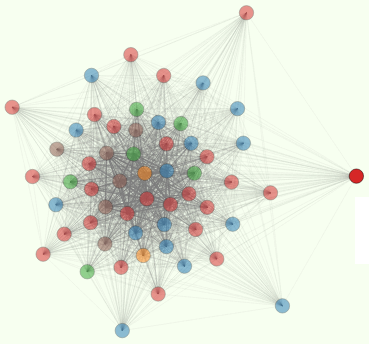

Dialog Tree

Props to Alfred Francis
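A dialog tree lets the bot take the initiative: each node holds the bot's prompt and maps the user's reply to the next node. A minimal sketch with a hypothetical `DIALOG_TREE` dictionary and `step` function. The prompts, and the choice to stay on the same node after an unrecognized reply, are assumptions and not Alfred Francis's implementation.

```python
# hypothetical tree: node name -> bot prompt and reply-to-next-node transitions
DIALOG_TREE = {
    "start": {
        "bot": "Do you have a question about QA bots? (yes/no)",
        "next": {"yes": "ask", "no": "end"},
    },
    "ask": {"bot": "Great, type your question.", "next": {}},
    "end": {"bot": "Bye! Take care.", "next": {}},
}


def step(state, user_text):
    """Return the next node; unknown replies stay on the same node so the bot can re-ask."""
    node = DIALOG_TREE[state]
    return node["next"].get(user_text.strip().lower(), state)


state = step("start", "Yes")
print(DIALOG_TREE[state]["bot"])  # Great, type your question.
```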

Written on December 19, 2019