B-Machine Learning

The “B” isn’t for Bot, it’s for “Benefit”, as in B-Corporation. What do B-Corps have to do with Machine Learning?

Machine learning “Bots” have been affecting culture and making moral decisions for decades. But the exponential growth in “morally sensitive” decisions made by machines makes it increasingly critical that we incorporate diversity of thought and people into the process of building the machines.

The control problem is a big deal. How do we ensure that machines behave morally and intelligently? Well, if you think about it, controlling machines will be no more difficult than controlling corporations. After all, corporations run on software (by-laws, processes, procedures, certifications) and act in a world full of “learning” feedback (financial success, lawsuits, criminal law enforcement, social protests). So we can have success in the Machine Learning world to the same degree we had success in forming the “rules of the game” for corporations in the 20th century.

Do you think corporations and government agencies are acting in an ethical, morally responsible way? I dare say most of you would find examples on both ends of the spectrum, from Enron to VW and Uber to the Red Cross or Red Crescent. By the way, the Red Cross and Red Crescent would both have a lot to answer for if they had to sit before a diverse panel of morality critics. It’s certainly possible to operate an international corporation in a completely immoral way, harming millions of people’s lives while raking in the monetary booty of your theft, all without incurring any legal penalties from any of the national or international organizations that regulate your actions. It’s also possible to build corporations whose sole purpose is increasing the greater good in the world. So my question is not a very fair question.

A better question is: what is the sum total of all the good corporations do in this world? But that question is difficult for different reasons. Measuring impact on humanity and total “good” is very difficult. Is it more important to advance human culture and technology or to prevent suicides of your employees working in third world sweatshops? But some actions/decisions are better than others, so there’s definitely a distance metric or norm of “good” that can be developed for this multidimensional “goodness” vector. So let’s just punt and assume that you have some such metric in your head and that we could build those metrics into our machines, if we chose to.
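To make the “goodness vector” idea a little more concrete, here is a minimal sketch of a weighted norm and distance over such a vector. The dimension names, the weights, and the example scores are all made up for illustration; picking real ones is exactly the hard part we just punted on.

```python
import math

# Hypothetical dimensions and weights for a "goodness" vector.
# These names and numbers are illustrative assumptions, not a proposal.
WEIGHTS = {
    "worker_wellbeing": 0.5,
    "cultural_advancement": 0.2,
    "environmental_impact": 0.3,
}


def goodness_norm(v, weights=WEIGHTS):
    """Weighted Euclidean norm of a goodness vector (a dict of scores)."""
    return math.sqrt(sum(w * v[k] ** 2 for k, w in weights.items()))


def goodness_distance(a, b, weights=WEIGHTS):
    """Weighted Euclidean distance between two goodness vectors."""
    diff = {k: a[k] - b[k] for k in weights}
    return goodness_norm(diff, weights)


# Two hypothetical decisions scored on each dimension (made-up values).
decision_a = {"worker_wellbeing": 1.0, "cultural_advancement": 0.0, "environmental_impact": 0.0}
decision_b = {"worker_wellbeing": 0.0, "cultural_advancement": 1.0, "environmental_impact": 0.0}

print(goodness_norm(decision_a))                  # magnitude of decision A
print(goodness_distance(decision_a, decision_b))  # how far apart A and B are
```

The weights are where the moral argument hides: change them and the same two decisions move closer together or further apart, which is one reason it matters who gets to set them.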

Real world problems

- corporations use shell companies to accomplish illegal and environmentally harmful things

- corporations and governments hire contractors to do morally questionable things

- corporations use recruiting agencies to be able to implement “affirmative action” initiatives and other “biased” hiring practices that would be illegal to implement within their corporate policy

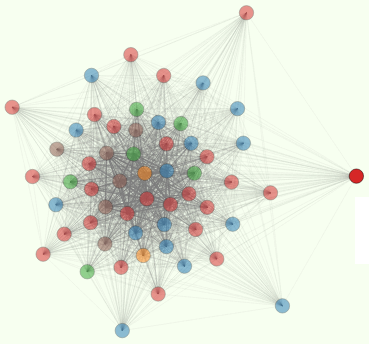

The only reason even this modest degree of moral responsibility has become a part of corporate law is the diversity of people allowed to influence the laws governing corporations and the personnel running the government agencies. So we need to think about translating this experience to Data Science and Machine Learning technology development efforts.

We’re starting to enable machines to make these decisions previously reserved for large organizations with human beings at the helm. Now those humans can hide behind their bots and blame bad actions on the software, the data, or even the engineers.

- missiles can (should) decide when to self-destruct on their way to a target based on the presence of civilians

- drones and satellite tasking algorithms can (should) take into account collateral damage minimization surveys when they decide which targets to spend time on, balancing this with the primary objective of winning the conflict and protecting allied combatants

- recruiting software can (should) decide to bubble up a diversity of candidates to the top of a resume stack

But what about just letting data speak the truth? Aren’t bots more objective and less subject to cognitive biases than humans? But with machine learning, we humans are training them. They “optimize” us according to whatever cost function we give them. What if our cost function doesn’t include “externalities”? What if it only includes near-term revenue this quarter? To truly serve the “total good”, an algorithm must optimize for a much broader, longer-term metric like the advancement of humanity. Or even better, the advancement of life. Or even better, the advancement of complexity and diversity of information content in the universe… OK, that sounds like I’m asking a bit much, but I think the machines can handle it…
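Here is a toy sketch of what leaving “externalities” out of the cost function does to a decision. The two actions, their revenue and externality numbers, and the `externality_weight` parameter are all hypothetical, chosen only to show the flip.

```python
# Hypothetical actions: (name, near_term_revenue, externality_cost).
# All numbers are made up for illustration.
ACTIONS = [
    ("cut_corners", 100.0, 80.0),
    ("do_it_right", 70.0, 5.0),
]


def narrow_objective(action):
    """Score an action on near-term revenue only."""
    _, revenue, _ = action
    return revenue


def broad_objective(action, externality_weight=1.0):
    """Score an action on revenue minus weighted harm to everyone else."""
    _, revenue, externality = action
    return revenue - externality_weight * externality


def best_action(objective):
    """Return the name of the action that maximizes the given objective."""
    return max(ACTIONS, key=objective)[0]


print(best_action(narrow_objective))  # optimizer sees revenue only
print(best_action(broad_objective))   # optimizer also pays for externalities
```

With these made-up numbers, the revenue-only objective picks `cut_corners` and the broader one picks `do_it_right`. The optimizer is identical in both cases; only the objective we handed it changed.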